Notes: This part is handled by our team. If you are part of a studio, get in touch with your publishing manager to define the best tests together and review the results.

- There are currently no standard parameter values for each game but we are working on preparing a standard naming convention and will share it soon. In the meantime, please reach out to your publishing manager to validate the list of parameters.

- Only Homa publishing managers can start A/B tests. You will, however, be able to review the results.

Once the game is ready for N-testing, Homa team members can create A/B tests to determine whether changing different aspects of the game can increase revenue or engagement.

Here are some examples of tests that most game teams run:

- Changing the interval between advertisements

- Changing the price of an item - e.g. skin, bundle, or in-game item like a weapon

- Changing the UI/UX or artwork - e.g. make the shop icon a different colour, add visual notifications

Once the test reaches a conclusion, it’s possible to roll out the change to all players.

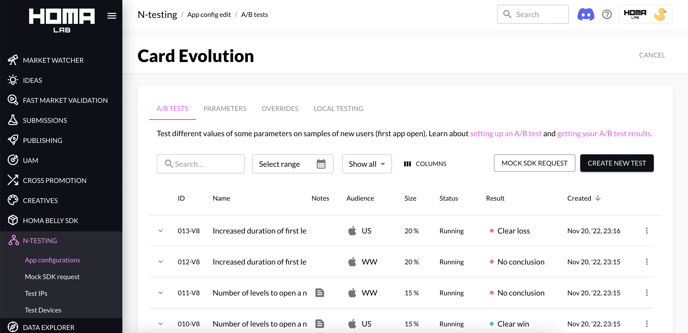

Step 1: Go to the N-testing project and select the game

- Go to N-testing

- Click on your game

You will see a list of all the A/B tests already run for the game.

Step 2: Create/Set up a test

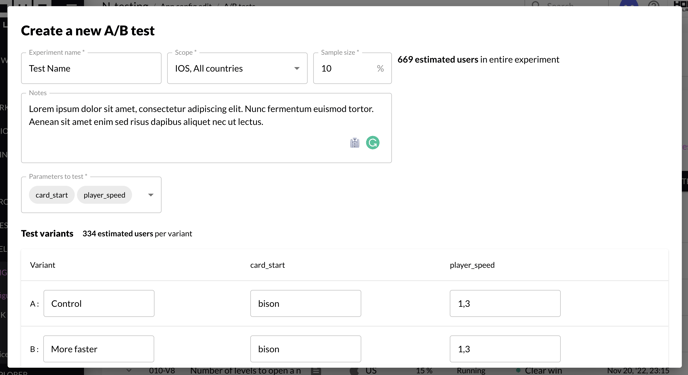

- Click on Create new test and a configuration pop-up will be displayed:

| Name of the value | Description | Guideline |
| --- | --- | --- |
| Experiment name | The name displayed in the list of tests. We strongly recommend using a descriptive name that indicates what’s being tested. | Add the scope to the name of the test, e.g. [iOS WW] for iOS Worldwide. Use a clear title that shows at a glance what you are testing, e.g. Enemy speed. |
| Scope | The platform and region of players included in the test. For LTV tests we strongly recommend testing in one country at a time. | To test different countries, create a separate test for each one, e.g. testing the US and India separately requires 2 tests. |
| Sample size | Percentage of new users who will be included in this test. Users are randomly allocated to the test based on this target allocation. | When creating the test, an estimate of how many new users will be included is shown on the right. For a good sample, we recommend at least 2,000-3,000 users for the test. |
| Notes | Description of the test. Use it to list the parameters that were changed or to add anything you’d like others to be aware of. | This field is not mandatory but strongly recommended. Notes added here will appear in the list of tests, in the Note column. |
| Parameters to test | Choose the parameters that you want to modify (there is no limit on the number of parameters to test). | The list of parameters is displayed only once you have chosen a Scope. You can add multiple parameters. When you select a parameter, it appears below in the “Variant” block, where you can change its value. The list of parameters is managed by the publishing manager. |
| Variant | A variant contains a parameter or a list of parameters. For each variant, you can choose the values you want to test. For example, to change the parameter enemy_speed with default value = 4: variant A: enemy_speed = 4; variant B: enemy_speed = 3; variant C: enemy_speed = 2. In this case, of the users in the test, some will receive variant A, others B, and others C. | By default, variant A is named control, but you can change this. |
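To make the fields above concrete, here is a minimal Python sketch of how an experiment could be written down before entering it in the pop-up. The class, field and method names (`Experiment`, `Variant`, `build_name`) are hypothetical and are not part of N-testing, and the 30% sample size is an illustrative value. Only the `enemy_speed` values and the `[iOS WW]` naming guideline come from the table.

```python
from dataclasses import dataclass, field


@dataclass
class Variant:
    name: str
    parameters: dict  # parameter name -> value used by this variant


@dataclass
class Experiment:
    scope: str            # platform + region, e.g. "iOS WW"
    title: str            # what is being tested, e.g. "Enemy speed"
    sample_size_pct: int  # percentage of new users included in the test
    notes: str = ""
    variants: list = field(default_factory=list)

    def build_name(self) -> str:
        # Naming guideline from the table: scope in brackets, then a clear title.
        return f"[{self.scope}] {self.title}"


experiment = Experiment(
    scope="iOS WW",
    title="Enemy speed",
    sample_size_pct=30,  # illustrative value only
    notes="enemy_speed: 4 (default) vs 3 vs 2",
    variants=[
        Variant("control", {"enemy_speed": 4}),  # variant A keeps the default value
        Variant("B", {"enemy_speed": 3}),
        Variant("C", {"enemy_speed": 2}),
    ],
)

print(experiment.build_name())  # prints "[iOS WW] Enemy speed"
```

Writing the test down in this form first makes it easier to agree on the parameters and values with your publishing manager before anything is created.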

- Click on “Save experiment” to save your changes and configurations.

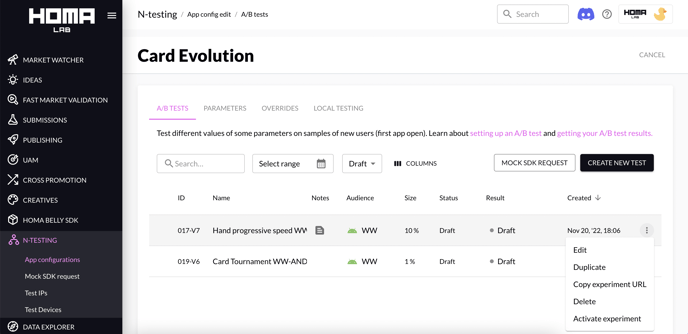

- This creates a Draft experiment, which you can activate by clicking the three-dot contextual menu and choosing Activate experiment. Activation enables all variants of the experiment, and new users are then randomly assigned to one of the groups based on the sample size.
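The allocation of new users can be pictured with a small Python sketch. It is an illustration only: how N-testing actually assigns users is not described in this guide, and the function `assign_user`, the even split between variants, and the seeded random generator are all assumptions.

```python
import random
from collections import Counter


def assign_user(rng, sample_size_pct, variants):
    """Return a variant name for a new user, or None if the user is not in the test."""
    # Step 1: only sample_size_pct % of new users enter the test at all.
    if rng.random() * 100 >= sample_size_pct:
        return None
    # Step 2 (assumption): users in the test are split evenly across the variants.
    return rng.choice(variants)


rng = random.Random(42)  # fixed seed so the sketch is reproducible
variants = ["control", "B", "C"]
counts = Counter(assign_user(rng, 30, variants) for _ in range(10_000))

in_test = sum(n for name, n in counts.items() if name is not None)
print(f"users in test: {in_test} of 10000")
for name in variants:
    print(name, counts[name])
```

With a 30% sample size, roughly 3 in 10 simulated users enter the test, and each of the three variants receives about a third of them. The rest see the game unchanged.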

Guidelines:

- If multiple tests are in the “running” state, a new player is assigned to only one of them.

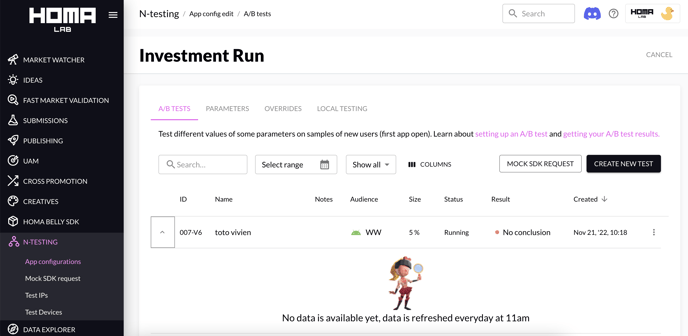

- You will be able to review the first data from your test the day after the activation, but reaching a conclusive result may take a few days.

- You will need 3,000+ players per variant for the findings of the test to stabilise. Try to choose a sample size that lets you reach that number within a few cohort days, if possible. To do so, check the number of new users in your app for your chosen scope, and use the day with the lowest installs as the benchmark.

- If the estimated number of users shows 0 after you set the scope and the sample size, it may be because the scope was created recently. In that case, try again a few days later.

- A minimum number of installs is required for a valid result. If this minimum is not reached, you will see a message like the one below on your test. To avoid it, change the sample size to a value lower than the previous one.
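The sizing guideline above comes down to simple arithmetic, sketched here in Python. The function `days_to_reach_target` is hypothetical, the install counts are made up for illustration, and the even split of test users across variants is an assumption. The 3,000 players per variant and the use of the lowest-install day as the benchmark come from the guidelines.

```python
import math


def days_to_reach_target(daily_installs, sample_size_pct, n_variants,
                         target_per_variant=3000):
    """Estimate the cohort days needed to reach the target number of players per variant."""
    # Use the day with the lowest installs as a conservative benchmark.
    benchmark = min(daily_installs)
    # Assumption: users in the test are split evenly across the variants.
    per_variant_per_day = benchmark * sample_size_pct / 100 / n_variants
    if per_variant_per_day <= 0:
        raise ValueError("no users would enter the test with these settings")
    return math.ceil(target_per_variant / per_variant_per_day)


# Made-up daily install counts for one week in the chosen scope.
installs = [5200, 4800, 6100, 4000, 5500, 7000, 6600]

for pct in (20, 50, 100):
    days = days_to_reach_target(installs, pct, n_variants=3)
    print(f"sample size {pct}% -> about {days} cohort days")
```

With these numbers, a 20% sample size needs about 12 cohort days for three variants, 50% needs about 5, and 100% needs about 3. Comparing a few candidate sample sizes this way helps you choose one that reaches the target in a reasonable time.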

Next step: How to read the results of an A/B test